From AI PoCs to Production: A Playbook that Works

It's 2026 and nearly every large organisation has run an AI proof of concept. The demos are impressive. Stakeholders nod along. A business case gets socialised. And then, somewhere between the excitement and the enterprise architecture review, the whole thing stalls.

The pattern is so common it has become its own cliché: PoC purgatory.

The problem isn't ambition or even capability. It's that most organisations treat the path from prototype to production as a single leap, when it's actually a series of structured gates. Miss one and you're stuck. Nail them in sequence and you can move from demo to deployment in weeks, not quarters.

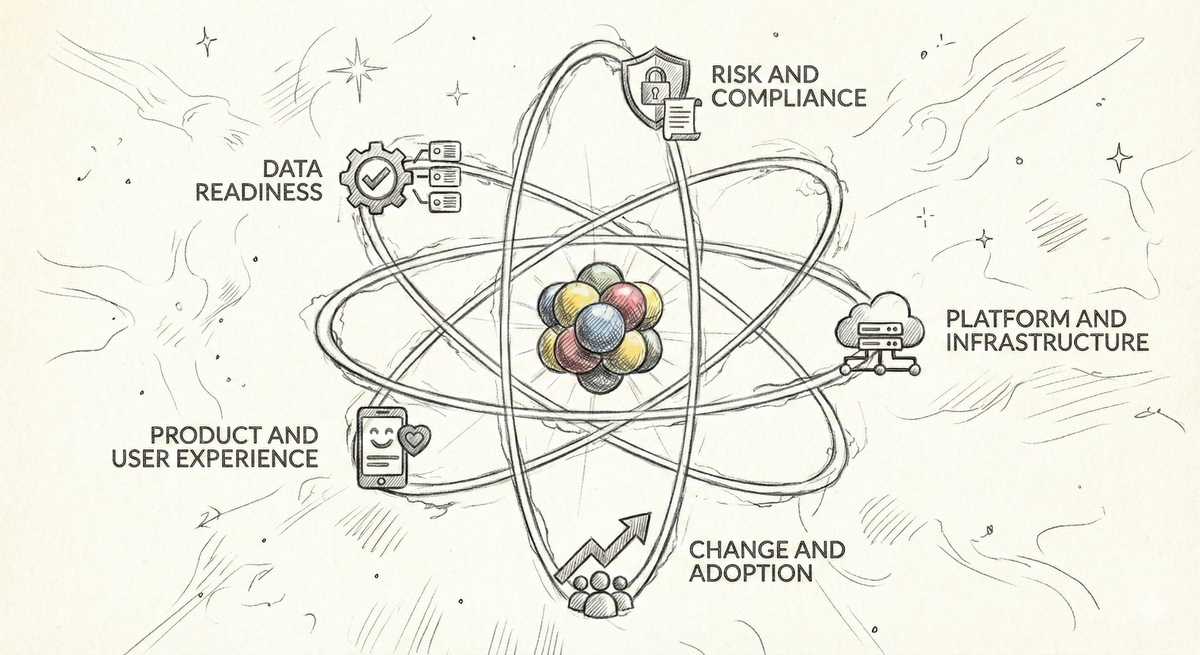

The 5 gates from PoC to production

Think of these as quality gates, not phases. Each one answers a specific question, and you don't move forward until it's resolved.

1. Data readiness

The question: Is the data available, governed and fit for purpose?

Most PoCs cheat on data. They use curated samples, skip access controls and ignore lineage. In production, that won't fly.

The first thing to get right is your data contracts. You need to know exactly which sources feed the model, who owns them, and what the freshness and quality SLAs look like. If the answer to any of those questions is "we'll figure it out later", you're not ready.
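A data contract can be as lightweight as a typed record per source plus an automated check. The sketch below is illustrative only. `DataContract`, `check_contract` and the completeness metric are hypothetical names, and the thresholds you use would come from the source owners.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class DataContract:
    """Hypothetical contract for one source that feeds the model."""
    source: str
    owner: str                  # accountable team or person
    max_staleness: timedelta    # freshness SLA
    min_completeness: float     # share of required fields populated, 0.0-1.0


def check_contract(contract, last_updated, completeness, now=None):
    """Return a list of human-readable violations (empty means compliant)."""
    now = now or datetime.now(timezone.utc)
    violations = []
    if not contract.owner:
        violations.append(f"{contract.source}: no named owner")
    if now - last_updated > contract.max_staleness:
        overdue = now - last_updated - contract.max_staleness
        violations.append(f"{contract.source}: stale by {overdue}")
    if completeness < contract.min_completeness:
        violations.append(
            f"{contract.source}: completeness {completeness:.0%} "
            f"below {contract.min_completeness:.0%}"
        )
    return violations
```

Running a check like this in the pipeline turns "we'll figure it out later" into a failing check that someone has to own.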

Next, assess data sensitivity early. If the pipeline touches PII, financial data or regulated content, that needs to be mapped and documented before anyone starts building. Retrofitting data governance onto a live system is painful and slow.
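A first-pass sensitivity map can be produced by scanning sample records before any build work starts. This is a minimal sketch that assumes a few regex patterns are enough for an initial inventory. The pattern set and `classify_fields` are hypothetical, and regexes are no substitute for proper data classification tooling.

```python
import re

# Hypothetical, deliberately crude patterns for a first-pass inventory only.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "uk_ni_number": re.compile(r"\b[A-Z]{2}\d{6}[A-D]\b"),
}


def classify_fields(sample_records):
    """Map each field name to the set of sensitive categories seen in its values."""
    inventory = {}
    for record in sample_records:
        for field_name, value in record.items():
            hits = {name for name, pattern in SENSITIVE_PATTERNS.items()
                    if pattern.search(str(value))}
            inventory.setdefault(field_name, set()).update(hits)
    return inventory
```

The output is a per-field list you can hand to the data owner and the privacy team to confirm, correct and document.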

Finally, build the plumbing before the model. Ingestion, transformation and storage patterns need to be production-grade from day one. A RAG pipeline that queries a shared drive might impress in a demo, but it won't survive its first security review. Get the data platform right and the AI layer on top becomes dramatically simpler.
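One concrete example of production-grade plumbing is an ingestion step that records lineage on every chunk it produces. The sketch below assumes character-based chunking with overlap; `ingest` and its parameters are hypothetical, and real pipelines usually chunk on document structure or tokens.

```python
import hashlib
from datetime import datetime, timezone


def ingest(source_id, text, chunk_chars=500, overlap=50):
    """Split a document into overlapping chunks that carry lineage metadata."""
    if overlap >= chunk_chars:
        raise ValueError("overlap must be smaller than chunk_chars")
    if not text:
        return []
    ingested_at = datetime.now(timezone.utc).isoformat()
    step = chunk_chars - overlap
    chunks = []
    for start in range(0, len(text), step):
        body = text[start:start + chunk_chars]
        chunks.append({
            # Deterministic ID: re-ingesting the same content yields the same key,
            # so loads can be upserts rather than duplicates.
            "chunk_id": hashlib.sha256(f"{source_id}:{start}:{body}".encode()).hexdigest()[:16],
            "source_id": source_id,      # lineage: where the chunk came from
            "offset": start,             # lineage: position in the source
            "ingested_at": ingested_at,  # lineage: when it was loaded
            "text": body,
        })
        if start + chunk_chars >= len(text):
            break
    return chunks
```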

2. Risk and compliance

The question: Can we explain, govern and defend this system?

AI risk isn't hypothetical any more. Regulators, boards and customers are all paying attention.

Start by defining your responsible AI guardrails. What can the model do? What can't it do? What topics or actions are explicitly out of scope? Document all of this clearly, because when something goes wrong (and it will), you need to show that boundaries were set and enforced.
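Guardrails are easier to defend when they live in code as well as in a document. The sketch below assumes a simple allowlist of actions and keyword-matched excluded topics. `GuardrailPolicy` is a hypothetical name, and in practice topic detection is usually handled by a classifier or your provider's content filters rather than substring matching.

```python
from dataclasses import dataclass, field


@dataclass
class GuardrailPolicy:
    """Hypothetical, explicitly documented scope for one AI feature."""
    allowed_actions: set = field(default_factory=set)
    blocked_topics: set = field(default_factory=set)

    def check(self, action, text):
        """Return (allowed, reason) so every refusal is explainable and loggable."""
        if action not in self.allowed_actions:
            return False, f"action '{action}' is out of scope"
        lowered = text.lower()
        for topic in sorted(self.blocked_topics):
            if topic in lowered:
                return False, f"topic '{topic}' is explicitly excluded"
        return True, "within documented scope"
```

Returning a reason string, rather than a bare boolean, gives you the evidence trail that shows boundaries were set and enforced.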

Observability needs to be baked into the pipeline from the beginning, not bolted on later. Log inputs, outputs and decisions at every step. If you can't audit a response, you can't defend it to a regulator, a board or a customer.
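A minimal version of "log inputs, outputs and decisions at every step" is one structured record per step, sharing a request ID across the whole chain. This sketch uses only the standard library. `audit_record` and the field names are assumptions, and logged inputs may themselves contain sensitive data that falls under the same governance as gate 1.

```python
import json
import logging
import uuid
from datetime import datetime, timezone

logger = logging.getLogger("ai_audit")


def audit_record(step, inputs, outputs, decision, request_id=None):
    """Build and emit one structured audit entry for a single pipeline step."""
    record = {
        "request_id": request_id or str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "step": step,          # e.g. "retrieve", "generate", "guardrail_check"
        "inputs": inputs,
        "outputs": outputs,
        "decision": decision,
    }
    logger.info(json.dumps(record, default=str))
    return record
```

Passing the same `request_id` to each step (retrieve, generate, guardrail check) lets an auditor reconstruct exactly how a given response was produced.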

Perhaps most importantly, engage your risk, legal and compliance teams early and treat them as collaborators, not approvers. The fastest way to kill a production deployment is a last-minute legal review that uncovers issues nobody thought to flag six months ago. Bring these stakeholders into the design process and you'll move faster, not slower.

3. Platform and infrastructure

The question: Will this run reliably at scale, within our security and cost boundaries?

The gap between a notebook and a production workload is enormous. This gate is about closing it.

The hosting pattern decision is foundational. Are you consuming a managed API like Azure AI Foundry, Google Vertex AI or Amazon Bedrock? Self-hosting an open-weight model for data residency or cost reasons? Running a hybrid setup? Each approach carries real trade-offs across cost, latency, data sovereignty and vendor lock-in. There's no universal right answer, but it's important to make the decision deliberately.

Automation is non-negotiable. You need CI/CD pipelines for model artefacts, infrastructure as code for the serving layer, and automated testing for prompt regressions. If a developer has to SSH into a box to deploy a model update, you're not production-ready.
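Prompt regression testing can start as a set of golden cases run on every change in CI. In the sketch below, `generate` stands in for whatever function calls your model, and the golden cases are hypothetical. String containment is a coarse check; many teams add semantic or model-graded evaluations on top.

```python
# Hypothetical golden cases: each pairs an input with properties the answer must keep.
GOLDEN_CASES = [
    {"input": "What is the refund window?",
     "must_contain": ["30 days"], "must_not_contain": ["guarantee"]},
    {"input": "Can I return opened items?",
     "must_contain": ["unopened"], "must_not_contain": []},
]


def run_regression(generate, cases=GOLDEN_CASES):
    """Run every golden case through `generate` and collect failures."""
    failures = []
    for case in cases:
        answer = generate(case["input"]).lower()
        missing = [s for s in case["must_contain"] if s.lower() not in answer]
        forbidden = [s for s in case["must_not_contain"] if s.lower() in answer]
        if missing or forbidden:
            failures.append({"input": case["input"], "missing": missing, "forbidden": forbidden})
    return failures
```

In a CI job, a non-empty failure list would fail the build, so a prompt or model change that breaks known-good behaviour never reaches production silently.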

Cost controls deserve substantial attention. Token-based pricing models can spiral fast, especially once real users start hitting the system at scale. Implement budgets, throttling and usage dashboards before you go live. Discovering a $50,000 monthly bill three weeks after launch is not a conversation anyone wants to have.
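Budgets and throttling can be enforced in the application layer before a request ever reaches the model. This is a sketch under simplifying assumptions: a single blended price per 1,000 tokens (most providers price input and output tokens differently), in-memory state and a sliding one-minute window. `TokenBudget` is a hypothetical class.

```python
import time


class TokenBudget:
    """Hypothetical guard combining a monthly spend cap with a per-user rate limit."""

    def __init__(self, monthly_cap_usd, price_per_1k_tokens, max_requests_per_minute):
        self.monthly_cap_usd = monthly_cap_usd
        self.price_per_1k_tokens = price_per_1k_tokens
        self.max_rpm = max_requests_per_minute
        self.spent_usd = 0.0
        self.recent = {}  # user -> timestamps of requests in the last 60 seconds

    def allow(self, user, now=None):
        """Return (allowed, reason); call before sending a request to the model."""
        now = time.monotonic() if now is None else now
        window = [t for t in self.recent.get(user, []) if now - t < 60]
        self.recent[user] = window
        if self.spent_usd >= self.monthly_cap_usd:
            return False, "monthly budget exhausted"
        if len(window) >= self.max_rpm:
            return False, "rate limit exceeded"
        window.append(now)
        return True, "ok"

    def record_usage(self, tokens):
        """Add the actual cost once the provider reports token usage."""
        self.spent_usd += tokens / 1000 * self.price_per_1k_tokens
        return self.spent_usd

    def utilisation(self):
        """Share of the monthly cap already spent, for dashboards and alerts."""
        return self.spent_usd / self.monthly_cap_usd
```

Alerting when `utilisation()` crosses a threshold such as 80% gives you the early warning that a hard cap alone does not.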

4. Product and user experience

The question: Does this actually solve the user's problem better than the alternative?

A technically sound AI system that nobody uses is still a failure.

The mistake most teams make here is focusing on model output in isolation, rather than the end-to-end user journey. Where does the AI fit into an existing workflow? What does the user see when the model is wrong? What happens when they disagree with a recommendation? These questions matter far more than benchmark scores.

Every AI feature also needs a graceful degradation path. Models will occasionally be slow, unavailable or uncertain, and when that happens, the user should still be able to complete their task. If your AI feature has no fallback, you've built a single point of failure into a business process.
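A graceful degradation path can be a thin wrapper that falls back to the non-AI route on timeout, error or low confidence. This sketch assumes your model call returns an `(answer, confidence)` pair, which not every API provides; `with_fallback` is a hypothetical helper. Note that the timeout stops waiting but does not cancel the underlying call.

```python
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout

_executor = ThreadPoolExecutor(max_workers=4)


def with_fallback(call_model, fallback, timeout_s=3.0, min_confidence=0.6):
    """Try the model; fall back on timeout, error or low confidence.

    `call_model` is assumed to return (answer, confidence); `fallback` is the
    non-AI path, such as standard search or the original manual form.
    """
    future = _executor.submit(call_model)
    try:
        answer, confidence = future.result(timeout=timeout_s)
    except FutureTimeout:
        return fallback(), "fallback: model timed out"
    except Exception as exc:  # provider outage, quota errors, malformed output
        return fallback(), f"fallback: model error ({type(exc).__name__})"
    if confidence < min_confidence:
        return fallback(), "fallback: low confidence"
    return answer, "model"
```

The second element of the return value tells you which path served the user, which is worth logging and tracking alongside the SLA metrics.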

Finally, measure outcomes rather than accuracy in the abstract. Task completion rate, time saved and user satisfaction are the metrics that tell you whether the system is actually delivering value. An F1 score of 0.95 means nothing if users are copying the output into a spreadsheet and manually fixing it before they can use it.
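Outcome metrics can be computed directly from session logs. The record shape below is an assumption: a `completed` flag, time taken in minutes, whether the user had to edit the output, and an optional 1-5 rating. `outcome_metrics` is a hypothetical helper. The rework rate captures the "copying it into a spreadsheet and fixing it" problem that accuracy scores miss.

```python
from statistics import median


def outcome_metrics(sessions, baseline_minutes):
    """Summarise outcome metrics from hypothetical session records."""
    completed = [s for s in sessions if s["completed"]]
    times = [s["minutes"] for s in completed]
    ratings = [s["rating"] for s in sessions if s.get("rating") is not None]
    return {
        "task_completion_rate": len(completed) / len(sessions),
        # Time saved versus the pre-AI process, on tasks that were actually finished.
        "median_minutes_saved": baseline_minutes - median(times) if times else None,
        # Share of completed tasks where the user had to fix the AI output.
        "rework_rate": (sum(s["edited_output"] for s in completed) / len(completed)
                        if completed else None),
        "mean_rating": sum(ratings) / len(ratings) if ratings else None,
    }
```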

5. Change and adoption

The question: Will the organisation actually embrace this?

This is where most technically successful projects quietly fail.

Adoption doesn't happen by default. You need champions: people within each team who believe in the tool and will advocate for it when the initial excitement fades. Find them early, equip them with the context and collateral they need, and give them a direct line back to the product team.

Training alone won't change behaviour either. A one-hour webinar might tick a box, but it won't shift how people work day to day. The far more effective approach is to embed the AI directly into existing tools and processes so that using it becomes the path of least resistance. If adoption requires people to change their habits, make the new habit easier than the old one.

Lastly, build feedback loops from the start. Production AI systems need continuous improvement, and the best signal for what to improve comes from the people using the system every day. Create lightweight channels for users to flag issues, suggest improvements and share wins. This keeps the system getting better and keeps users feeling invested in its success.

Reference architecture patterns (and when not to use them)

Not every AI use case needs the same architecture. Here are the three patterns we see most often, along with honest guidance on when they're the wrong choice.

Retrieval-Augmented Generation (RAG)

Best for: Grounding model responses in your own data, such as internal knowledge bases, policy documents or customer records.

When not to use it: If your data is small enough to fit in the context window and changes infrequently, or if the retrieval step adds latency that the use case can't tolerate. Also avoid RAG when you need deep reasoning over structured data; a well-designed API or database query is often simpler and more reliable.
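The core RAG loop, including the "fits in context" escape hatch, can be sketched in a few lines. This is a toy under stated assumptions: word overlap stands in for embedding-based vector search, and character count stands in for a token budget. `retrieve` and `build_prompt` are hypothetical names.

```python
import re


def tokens(text):
    """Lower-cased word set used for a crude relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, docs, k=2):
    """Rank docs by word overlap with the query (a stand-in for vector search)."""
    q = tokens(query)
    ranked = sorted(docs.items(), key=lambda kv: len(q & tokens(kv[1])), reverse=True)
    return [(doc_id, text) for doc_id, text in ranked[:k] if q & tokens(text)]


def build_prompt(query, docs, context_budget_chars=2000):
    """Include the whole corpus if it fits; otherwise retrieve the top matches."""
    corpus_size = sum(len(t) for t in docs.values())
    if corpus_size <= context_budget_chars:
        selected = list(docs.items())  # small corpus: skip retrieval entirely
    else:
        selected = retrieve(query, docs)
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in selected)
    return (
        "Answer using only the sources below and cite their IDs. "
        "If the answer is not in the sources, say so.\n\n"
        f"{context}\n\nQuestion: {query}"
    )
```

Asking the model to cite source IDs is what makes groundedness measurable later on.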

Tool use (function calling / agentic patterns)

Best for: Letting the model take actions, such as querying APIs, writing to databases, triggering workflows or orchestrating multi-step processes.

When not to use it: If the actions are high-risk and irreversible, and you don't have robust human-in-the-loop controls in place. If the tool landscape is unstable or poorly documented. Agentic patterns are powerful but brittle when the underlying APIs lack clear contracts and error handling.
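Human-in-the-loop control can sit in the dispatcher that executes model-proposed tool calls. This sketch is provider-agnostic and assumes each proposed call arrives as a tool name plus an arguments dict. `Tool`, `ToolRegistry` and the `approve` hook are hypothetical, not any vendor's function-calling API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    name: str
    func: Callable[..., object]
    irreversible: bool = False


class ToolRegistry:
    """Dispatch model-proposed tool calls, gating irreversible ones on human approval."""

    def __init__(self, approve: Callable[[str, dict], bool]):
        self.tools = {}
        self.approve = approve  # hypothetical hook, e.g. a ticket or chat approval step

    def register(self, tool):
        self.tools[tool.name] = tool

    def dispatch(self, name, args):
        tool = self.tools.get(name)
        if tool is None:
            return {"status": "error", "detail": f"unknown tool '{name}'"}
        if tool.irreversible and not self.approve(name, args):
            return {"status": "rejected", "detail": "human reviewer declined"}
        try:
            return {"status": "ok", "result": tool.func(**args)}
        except Exception as exc:  # return errors to the model instead of crashing
            return {"status": "error", "detail": str(exc)}
```

Returning structured errors (unknown tool, bad arguments, rejected) gives the model something it can reason about, which is exactly the clear contract that brittle agentic setups lack.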

Multi-agent systems

Best for: Complex workflows where multiple specialised AI agents collaborate to complete a task, such as research and report generation, multi-step approval processes, or orchestrating actions across several business systems.

When not to use it: If the task can be handled reliably by a single model call or a simple chain. Multi-agent systems introduce coordination overhead, latency and debugging complexity that isn't justified for straightforward use cases. They also require robust observability across agents, clear handoff protocols and well-defined failure modes. If you can't articulate why one agent isn't enough, you probably don't need more than one.
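Clear handoff protocols and well-defined failure modes can be made concrete even in the simplest topology, a sequential orchestrator. In this sketch the agents are plain functions standing in for LLM-backed agents, and `Handoff` and `run_pipeline` are hypothetical names. Real multi-agent systems often route dynamically, but the same explicit message and trace apply.

```python
from dataclasses import dataclass, field


@dataclass
class Handoff:
    """Explicit message passed between agents so every hop can be inspected."""
    task: str
    payload: dict
    trace: list = field(default_factory=list)  # (agent name, outcome) per hop


def run_pipeline(agents, handoff):
    """Run (name, agent) pairs in order and stop at the first failure.

    Each agent takes (task, payload) and returns a new payload or raises.
    Stopping early keeps downstream agents from working on bad input and
    leaves a trace showing exactly which agent failed.
    """
    for name, agent in agents:
        try:
            handoff.payload = agent(handoff.task, handoff.payload)
        except Exception as exc:
            handoff.trace.append((name, f"failed: {exc!r}"))
            return handoff, "failed"
        handoff.trace.append((name, "ok"))
    return handoff, "completed"
```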

SLAs for AI: latency, quality and cost

Traditional software has well-understood SLA frameworks. AI systems need their own, covering three dimensions that are often in tension with each other.

| Dimension | What to measure | Example target |
|---|---|---|
| Latency | End-to-end response time from user input to rendered output | P95 < 3 seconds for interactive use cases; < 30 seconds for batch/async |
| Quality | Task-specific accuracy, groundedness, hallucination rate | > 95% groundedness for RAG; < 2% hallucination rate on critical outputs |
| Cost | Cost per query, cost per user, total monthly spend | < $0.05 per query for high-volume use cases; monthly budget cap with alerts |
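Checking a batch of traffic against example targets like these takes only a few lines. This sketch assumes you already have per-request latencies, a per-response groundedness verdict (from human review or an automated evaluator) and total spend for the period. `check_sla` and `TARGETS` are hypothetical, and the percentile uses the nearest-rank method.

```python
import math

# Example targets for an interactive RAG use case, mirroring the table.
TARGETS = {"p95_latency_s": 3.0, "min_groundedness": 0.95, "max_cost_per_query_usd": 0.05}


def p95(values):
    """Nearest-rank 95th percentile."""
    ordered = sorted(values)
    rank = math.ceil(95 * len(ordered) / 100)
    return ordered[rank - 1]


def check_sla(latencies_s, grounded_flags, total_cost_usd, targets=TARGETS):
    """Return {metric: (measured value, meets target)} for one reporting period."""
    measured = {
        "p95_latency_s": p95(latencies_s),
        "groundedness": sum(grounded_flags) / len(grounded_flags),
        "cost_per_query_usd": total_cost_usd / len(latencies_s),
    }
    met = {
        "p95_latency_s": measured["p95_latency_s"] < targets["p95_latency_s"],
        "groundedness": measured["groundedness"] > targets["min_groundedness"],
        "cost_per_query_usd": measured["cost_per_query_usd"] < targets["max_cost_per_query_usd"],
    }
    return {name: (measured[name], met[name]) for name in measured}
```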

The key insight is that these three dimensions form a triangle. You can optimise for two at the expense of the third. A faster, higher-quality response costs more. A cheaper response is either slower or lower quality. Make these trade-offs explicit and visible to stakeholders from the start.

How to set AI SLAs

- Baseline against the current process. If the AI replaces a manual task that takes 20 minutes, a 10-second response with 90% accuracy is a massive win. Set SLAs relative to the alternative, not to perfection.

- Differentiate by use case tier. Not every AI feature needs the same SLA. A customer-facing chatbot needs tighter quality controls than an internal summarisation tool.

- Monitor and iterate. AI SLAs aren't set-and-forget. Model performance drifts, usage patterns shift and costs fluctuate. Build dashboards and review them monthly.
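The three practices above can be sketched together: a baseline comparison, tiered targets and a month-on-month drift check. Every name and number here is a hypothetical placeholder. The baseline formula assumes every AI output is reviewed and the failures are redone by hand, which is one reasonable model rather than the only one.

```python
# Hypothetical tiers; the numbers are placeholders to be set per organisation.
SLA_TIERS = {
    "customer_facing": {"p95_latency_s": 3.0, "min_quality": 0.98, "max_cost_per_query_usd": 0.05},
    "internal_tool": {"p95_latency_s": 10.0, "min_quality": 0.90, "max_cost_per_query_usd": 0.10},
}


def expected_minutes_with_ai(ai_seconds, accuracy, manual_minutes, review_minutes=0.0):
    """Expected effort per task if failed outputs fall back to the manual process."""
    return ai_seconds / 60 + review_minutes + (1 - accuracy) * manual_minutes


def monthly_drift(previous, current, tolerance=0.10):
    """Flag metrics whose relative change month on month exceeds `tolerance`."""
    return {
        name: round((current[name] - previous[name]) / previous[name], 3)
        for name in previous
        if name in current and previous[name]
        and abs(current[name] - previous[name]) / previous[name] > tolerance
    }
```

With the figures from the baseline example (a 20-minute manual task, a 10-second AI response, 90% accuracy), the expected effort is about 10/60 + 0.1 × 20 ≈ 2.2 minutes per task before any review time, which is why the comparison should be against the current process rather than against perfection.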

The bottom line

The gap between AI demo and AI production isn't a technology problem. It's a readiness problem. Organisations that treat the journey as a structured sequence of gates, rather than a single heroic push, ship faster and with fewer surprises.

The five gates (data, risk, platform, product, change) aren't revolutionary. They're just disciplined. And discipline, not novelty, is what separates organisations that talk about AI from those that run on it.