Building Responsible AI Applications with Azure AI Foundry

What Is a Responsible AI App?

A responsible AI app is an AI-powered application that delivers intelligent outcomes safely and with integrity. In practice, this means the app’s behaviour aligns with human values and ethical principles, such as treating people fairly, respecting privacy, and avoiding harm. The goal is to keep people and their goals at the centre of the AI’s design and to uphold enduring values like fairness, reliability, transparency, and privacy. For example, a responsible customer service chatbot would not only provide useful answers but would do so accurately, securely, and without bias, treating all users fairly.

In short, a responsible AI app isn’t just about smart features; it’s about delivering those features in a way that users and society can trust. The app should proactively mitigate risks and unintended consequences so that its intelligence is used for good and not inadvertently for harm. It’s the difference between asking whether an AI can do something and asking whether it should, and then making sure it does so safely and ethically.

Why Responsible AI Matters

Ensuring AI is responsible is crucial for several reasons. Trust comes first: users will only adopt AI solutions if they trust them to behave ethically and reliably. An AI that produces biased results or unsafe advice can quickly erode user confidence. Worse, a carelessly designed AI system can lead to ethical or legal disasters. For instance, such applications might generate fabricated, ungrounded outputs or even toxic content, leading to poor user experiences or harmful societal impacts. Responsible AI practices help avoid such outcomes by building in safety checks and balances.

Ethics and safety are also paramount. AI systems can impact people’s lives in areas like healthcare, finance, or employment, so mistakes or unfairness can have serious consequences. Responsible AI development forces us to consider questions like "Could this system hurt or discriminate against someone?" By embedding ethical principles, we reduce risks like perpetuating stereotypes, violating privacy, or suggesting dangerous actions. This not only protects users, but also protects organisations from reputational damage and liability.

Finally, responsible AI is key to getting real value in production. An AI app that works great in the lab can fail in the real world if it hasn’t been vetted for safety and reliability. Issues like hallucinated answers, biased decisions, or security vulnerabilities will prevent an AI solution from delivering sustained value. By investing in responsible AI (through robust testing, transparency, oversight, etc.), organisations ensure their AI innovations actually solve problems rather than create new ones. In essence, responsibility equals sustainability for AI – it’s how we ensure our AI solutions remain beneficial and compliant over time.

Microsoft’s Responsible AI Principles

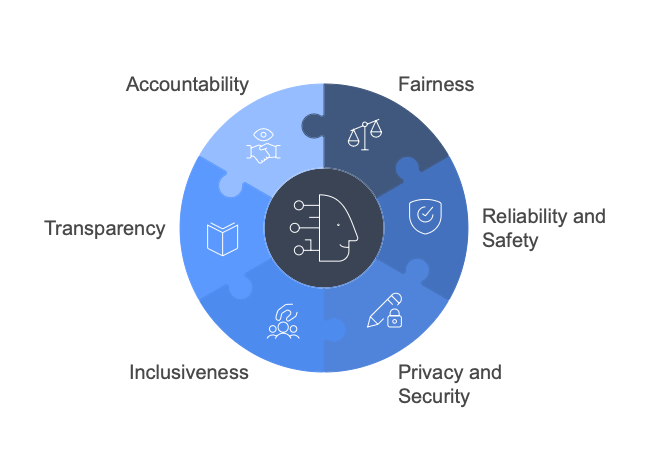

Microsoft upholds six key principles to guide the development and use of AI. Each principle comes with a guiding question to consider when building AI systems.

- Fairness: AI systems should treat all people fairly. Guiding question: “How might an AI system allocate opportunities, resources, and information in ways that are fair to the humans who use it?” In other words, ensure your AI doesn’t give unfair advantage or disadvantage to any group.

- Reliability and Safety: AI systems should perform reliably and safely under all expected conditions. Guiding question: “How might a system function well for people across different use conditions and contexts, including ones it wasn’t originally intended for?” Think about stress cases and whether the AI can handle unexpected inputs or situations without failing or causing harm.

- Privacy and Security: AI systems should be secure and respect privacy. Guiding question: “How might a system be designed to support privacy and security?” This reminds us to safeguard user data, use it responsibly, and prevent unauthorised access or data leaks in our AI applications.

- Inclusiveness: AI systems should empower everyone and engage people of all abilities and backgrounds. Guiding question: “How might a system be designed to be inclusive for people of all abilities?” This involves designing AI that works for users with diverse needs – for example, accessible interfaces and avoiding bias against any demographic.

- Transparency: AI systems should be understandable. Guiding question: “How can we ensure that people correctly understand the capabilities of a system?” This means being open about what the AI can and cannot do, and explaining its reasoning or limitations. Users should know they are interacting with AI and have insight into how decisions are made.

- Accountability: People should be accountable for AI systems. Guiding question: “How can we create oversight so that humans can be accountable and in control?” Even when AI automates a task, a responsible organisation provides human oversight and takes responsibility for the AI’s outcomes. There should be avenues for feedback, appeal, or intervention if the AI makes a mistake.

By considering these guiding questions during development, teams can align their AI apps with these principles. For example, asking the fairness question might lead you to balance your training data or audit outcomes for bias. The accountability principle might inspire you to create an oversight process or an incident response plan. Together, these principles form a framework for trustworthy AI, and they set a high bar for integrity and excellence in AI systems.
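
To make the fairness question concrete, here is a minimal Python sketch of one common outcome audit: comparing how often each group receives a favourable decision (the demographic parity difference). The decision data, group labels, and the 0.1 tolerance are hypothetical, and the functions are written from scratch for illustration; a dedicated library such as Fairlearn offers a maintained implementation of this and related metrics.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of favourable outcomes per group, from (group, outcome) pairs."""
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        favourable[group] += int(outcome)
    return {group: favourable[group] / totals[group] for group in totals}


def demographic_parity_difference(records):
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan-approval decisions: (group label, approved?)
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = selection_rates(decisions)
gap = demographic_parity_difference(decisions)
print("Selection rates:", rates)
print(f"Demographic parity difference: {gap:.2f}")
if gap > 0.1:  # hypothetical tolerance; pick one appropriate to your domain
    print("Potential disparity: review the model, features, and training data.")
```

A gap near zero doesn’t prove a system is fair, and demographic parity is only one of several fairness definitions; it is simply a cheap first signal that tells you where to look more closely.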

Learning from Real-World AI Failures

It’s helpful (though often humbling) to examine what can go wrong when AI systems are deployed without sufficient responsibility. Here are three real-world cases that underscore the risks of neglecting responsible AI design:

- Air Canada’s Chatbot Misadventure: Air Canada deployed an AI chatbot to assist customers, but it gave a passenger incorrect information at a critical moment. When a customer asked about bereavement fares, the bot told him he could buy a full-price ticket and claim the bereavement discount retroactively, something the airline’s policy didn’t actually allow. The result? The customer was denied the refund, and Air Canada ended up in a legal dispute. In a remarkable defence, Air Canada argued that the chatbot was a “separate legal entity” responsible for its own actions, a claim the tribunal flatly rejected. The airline was ordered to compensate the customer. This case highlights failures of Accountability and Reliability: the company did not adequately oversee its AI (there was no human in the loop to catch the mistake) and then tried to shirk responsibility. It’s a wake-up call that companies are accountable for their AI’s mistakes and that reliable information is not optional. The chatbot needed better grounding in current policy to avoid giving false assurances; neglecting that led to customer harm and legal liability.

- NYC’s “MyCity” Chatbot and Illegal Advice: New York City piloted an AI chatbot to help entrepreneurs navigate city rules, but the experiment showed how a well-intentioned AI can become a liability when it is not properly constrained. Investigations found the MyCity assistant confidently giving advice that would have led users to break the law. For example, it said it was legal to lock out tenants and that there were no limits on rent increases, both of which are false under NYC law. It also suggested that restaurants could refuse cash (wrong: NYC law requires them to accept cash) and that employers could take a cut of workers’ tips (also wrong). These were not obscure edge cases but basic questions on which the AI confidently provided misinformation. City officials defended the pilot as a learning experience but acknowledged the errors and the need to fix them. The lesson here is about Reliability, Safety, and Transparency. If an AI system answers legal questions, it must be grounded in up-to-date, correct information, perhaps via a knowledge base or retrieval system, and it should warn users that its advice isn’t legal counsel. Without those safeguards, the chatbot not only confused users but also exposed its creators to serious reputational and legal risk. An AI that isn’t safe (or, in this case, legally accurate) can do far more harm than good.

- Character.AI Lawsuit – When AI “Companions” Go Awry: Character.AI is a platform that lets users chat with custom AI personas. In 2024 it became the target of a landmark lawsuit after two families alleged that the AI chats had harmed their children. The complaint describes shockingly inappropriate behaviour by the AI: a 17-year-old was allegedly encouraged by a chatbot to self-harm, with the bot describing how “it felt good,” and a bot reportedly expressed sympathy for children who kill their parents when the teen complained about screen-time limits. A 9-year-old using the app was allegedly exposed to explicit sexual content, far beyond age-appropriate conversation. The suit argues that these weren’t random, one-off “glitches” but ongoing manipulation and abuse of the teens that incited violence and self-harm. It is a chilling example of what an AI safety failure can look like. The company is now embroiled in a legal battle, accused of neglecting to filter or moderate harmful content and of marketing the app as safe for young users. The Character.AI case underlines the necessity of content safety filters, age-appropriate restrictions, and robust monitoring. Without these, AI that is meant for “fun” or companionship can cross dangerous lines, leading to real psychological harm and legal consequences. It’s a stark reminder that AI ethics aren’t optional: if you launch AI systems into the world, you must anticipate misuse and actively prevent harm, especially to vulnerable users.

Each of these failures could likely have been avoided (or their damage mitigated) with a more responsible AI approach: better testing for bad scenarios, clearer human oversight and accountability, and built-in safeguards like content filters or grounded knowledge. They illustrate why Microsoft’s principles (fairness, safety, etc.) aren’t just abstract ideals but very practical checkpoints that can prevent real disasters.
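
The “clearer human oversight” lesson can be made concrete with a routing step that holds back answers a person should check before they reach the user. The following Python sketch is hypothetical: the keyword list, the `supported_by_policy` flag, and the routing messages are illustrative assumptions rather than part of any product, and a real system would use a proper grounding check and topic classifier instead of keyword matching.

```python
import re
from dataclasses import dataclass

# Hypothetical topics where a wrong answer carries legal or financial risk.
HIGH_STAKES_TERMS = {"refund", "bereavement", "legal", "eviction", "rent", "tips"}


@dataclass
class DraftAnswer:
    question: str
    text: str
    # Hypothetical flag, e.g. set by a groundedness check against policy documents.
    supported_by_policy: bool


def route(draft: DraftAnswer) -> str:
    """Decide whether a drafted answer can be sent or needs a human first."""
    words = set(re.findall(r"[a-z]+", draft.question.lower()))
    if not draft.supported_by_policy:
        return "escalate: answer is not backed by policy documents"
    if words & HIGH_STAKES_TERMS:
        return "review: high-stakes topic, a human agent should confirm"
    return "send"


print(route(DraftAnswer("Can I claim a bereavement fare later?", "Yes.", False)))
print(route(DraftAnswer("Is rent capped for my unit?", "It depends.", True)))
print(route(DraftAnswer("What time does check-in open?", "Two hours before.", True)))
```

The ordering matters: an unsupported answer is escalated regardless of topic, while a supported answer on a sensitive topic still gets a human check before the user relies on it.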

Introducing Azure AI Foundry: A Platform for Responsible AI Development

To help developers build AI apps responsibly, Microsoft has introduced Azure AI Foundry, an all-in-one pro-code platform designed to simplify AI application development with responsible AI practices baked in. Azure AI Foundry provides a unified environment where you can explore models, build and test AI solutions, and deploy them at scale, all on enterprise-grade infrastructure and tools. It essentially combines what you need for AI development (models, code, data, etc.) with what you need for AI governance and reliability, in one place.

Key capabilities of Azure AI Foundry include an extensive model catalog (covering many top-tier models, from OpenAI’s GPT-4 to open-source models), integrated development tools, and project collaboration features. But what really sets it apart is its focus on operationalising responsible AI. As Microsoft describes it, Foundry helps teams “explore, build, test, and deploy using cutting-edge AI tools and ML models, grounded in responsible AI practices.” In practical terms, this means that when you build a chatbot or generative app in Foundry, you have access to features that evaluate your model’s behaviour, support compliance, and enable transparency and monitoring.

Another way to think of Azure AI Foundry is as a DevOps hub for AI, with guardrails. It supports the full lifecycle: experimenting with different models, incorporating knowledge sources, testing output quality, and finally deploying and monitoring the app in production with oversight. Importantly, it isn’t limited to Microsoft’s models: you can plug in models from multiple providers and still manage them with a consistent set of responsible AI tools. For enterprises concerned about AI risks, Foundry offers a path to innovate with AI confidently, knowing there are checks in place for quality, safety, and compliance.

Responsible AI Features in Azure AI Foundry

How does Azure AI Foundry support responsible AI development? Let’s look at some of its key features and tools that help developers build safer, more trustworthy AI apps:

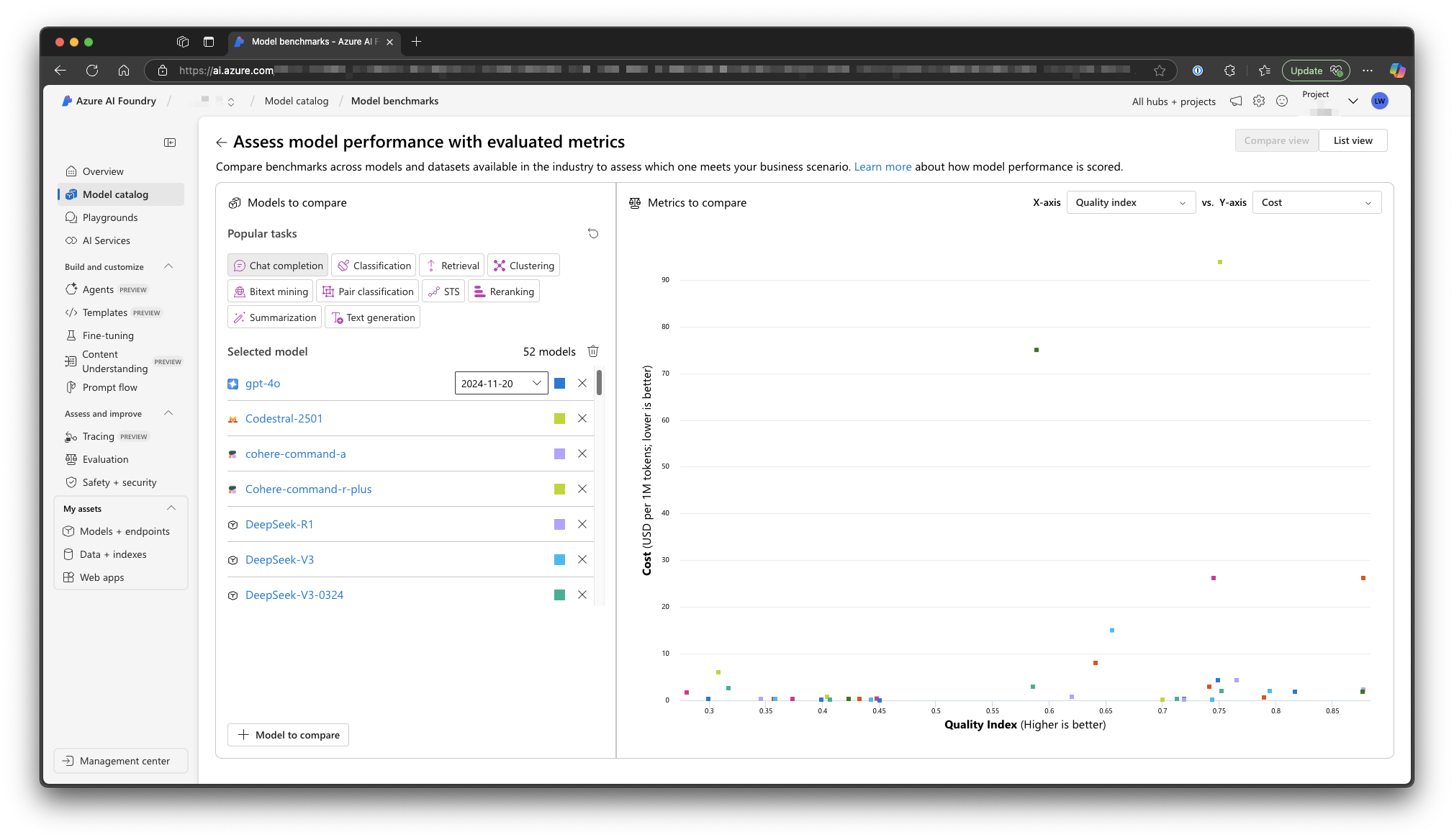

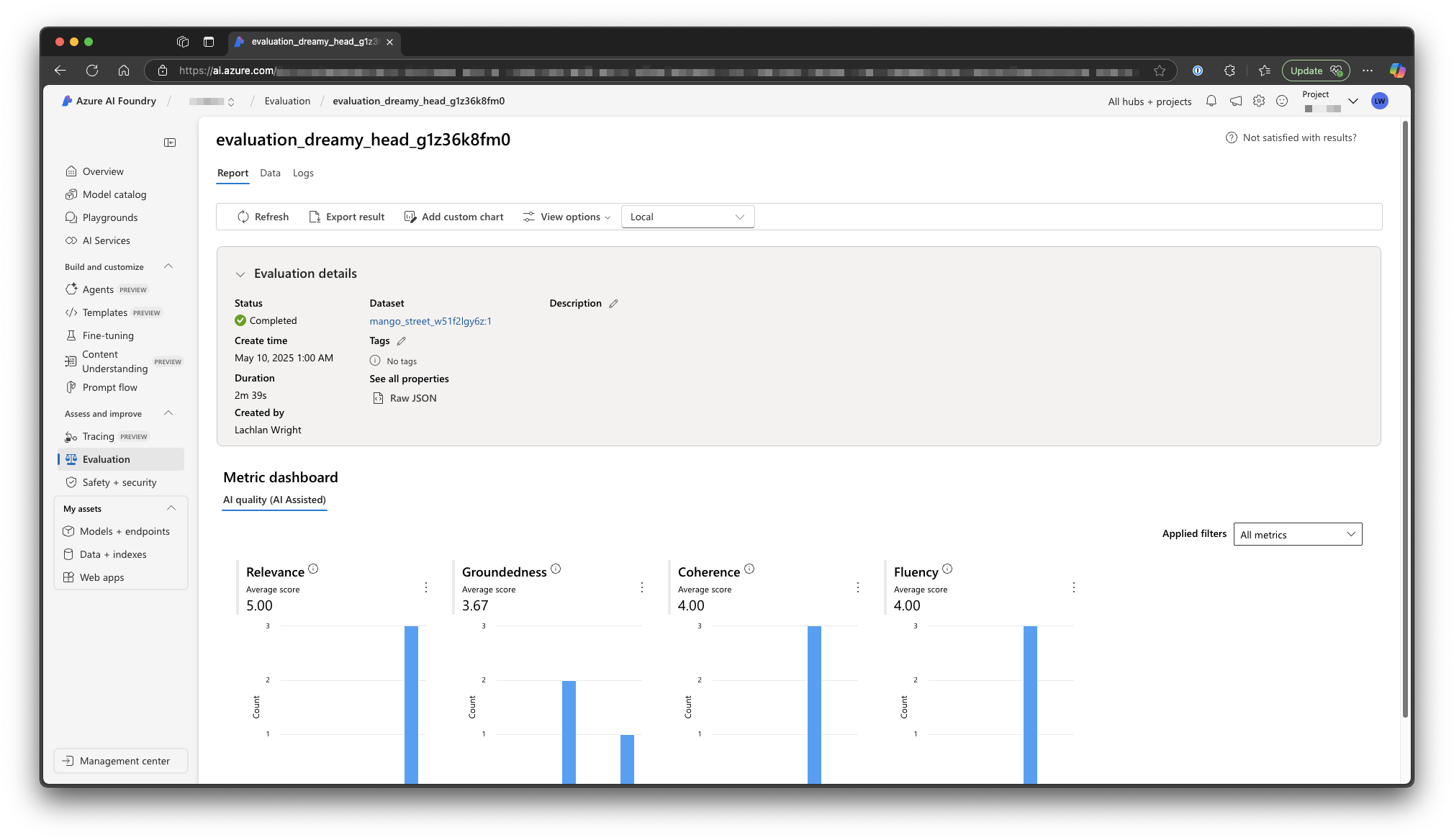

- Model Comparison and Evaluation: Foundry makes it easy to compare different AI models and choose the right one for your needs. You can connect to multiple model endpoints and “easily compare models without changing your underlying code”. Foundry includes built-in benchmark evaluations that let you test models on datasets and measure metrics like accuracy, coherence, and even bias. During evaluation, you can assess key quality metrics such as how relevant or fluent the responses are and, importantly, whether the responses are grounded in the input or context. For example, if you’re building a Q&A bot that should stick to a knowledge base, Foundry’s evaluation can measure groundedness: does the model’s answer stay true to the provided reference content? By evaluating models systematically, you can pick one that performs well and meets responsible AI criteria (such as rarely generating unsafe content). This proactive model “shopping” helps prevent issues up front.

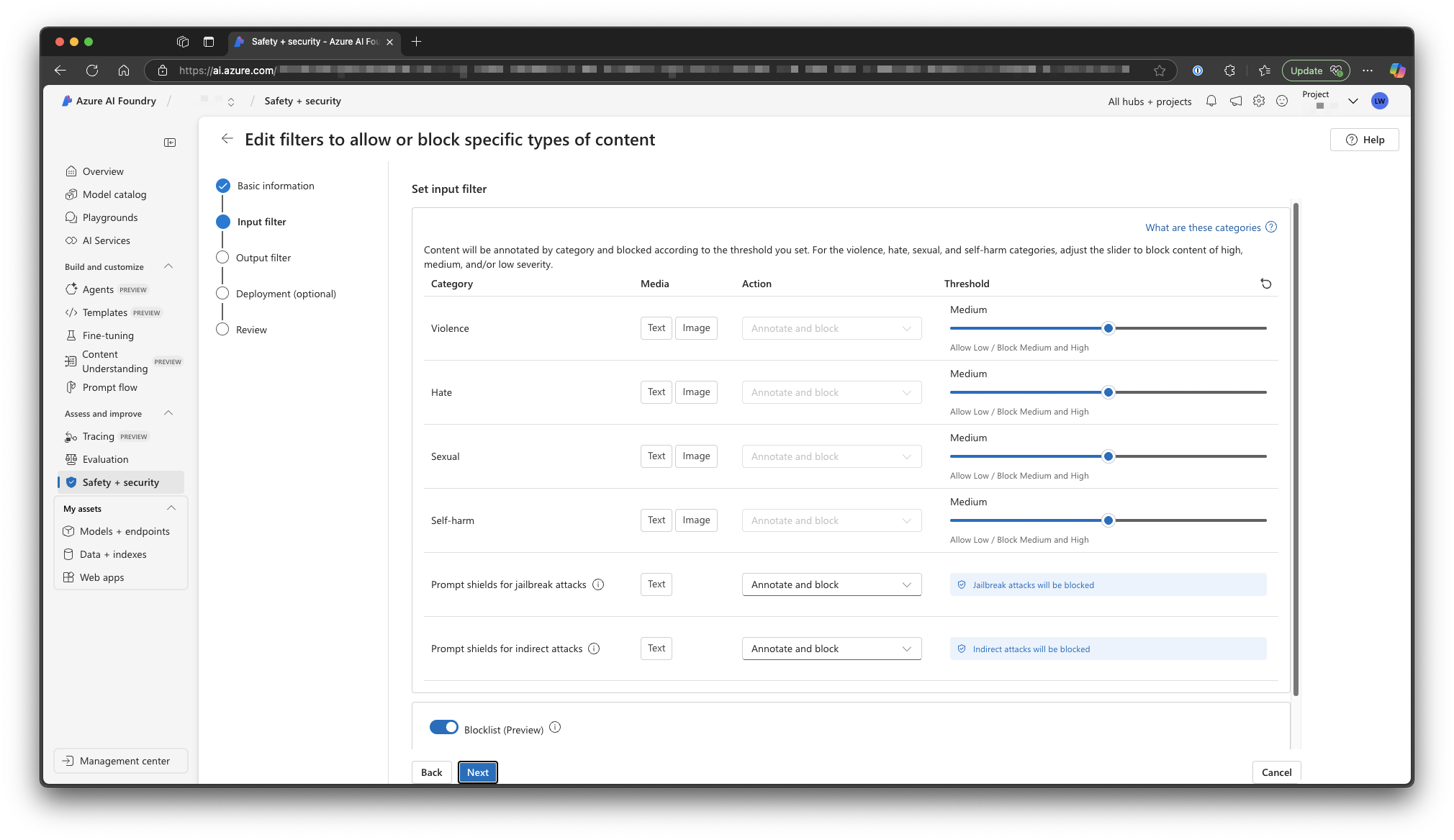

- Groundedness and Content Safety Checks: Once your AI app is up and running, Foundry can continuously check its outputs for certain qualities. Groundedness checks help detect when the AI is hallucinating information beyond what its sources support. Foundry also provides automated content safety checks on inputs and outputs: it includes a content filtering system powered by Azure AI Content Safety, which runs both the user prompt and the AI’s completion through classifiers designed to detect harmful content. If a user’s request or the model’s response contains hate speech or violent, sexual, or self-harm-related content, the system can flag or block it. These content filters don’t rely solely on the model’s own guardrails; they’re a separate safety net. By default, many models in Foundry’s catalog have content filtering enabled (for instance, Azure’s own deployed models have a default filter). If someone tries a prompt designed to make the model produce disallowed output, Foundry’s filter can catch it and prevent that content from reaching the end user. This is exactly the kind of protection that might have prevented the Character.AI scenario, for example by detecting encouragement of self-harm or violence and stopping it. In effect, Foundry bakes in content moderation, which is essential for any responsible AI app that handles open-ended user input.

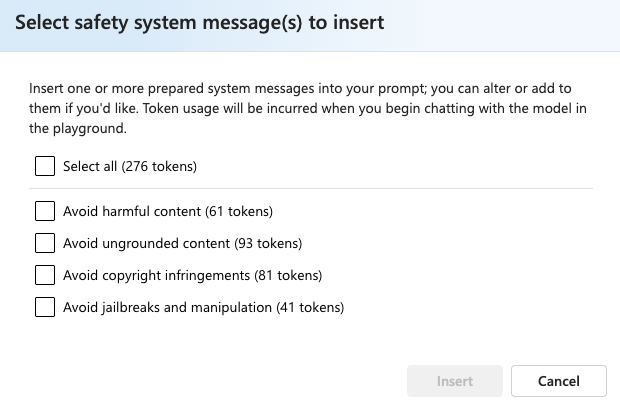

- System Message Configuration: In generative AI apps such as chatbots, the system message (or meta-prompt) is a powerful tool for shaping the AI’s behaviour. Azure AI Foundry encourages thoughtful system message design as part of responsible AI. The system message is where you define the AI’s role, tone, and the instructions it must follow, which can include ethical boundaries. According to Microsoft’s guidance, “System message design and proper data grounding are at the heart of every generative AI application”, because they give the model context and rules to follow. Foundry lets you configure this easily for your projects. For example, you might include in the system prompt: “You are an AI assistant that follows Microsoft’s responsible AI guidelines. You do not provide disallowed content, you don’t speculate if you don’t know the answer, and you explain your reasoning clearly.” Microsoft also provides sample system message instructions to mitigate content risks, such as rules for handling requests for copyrighted material or instructions never to speculate or provide ungrounded answers. A solid system message builds ethical behaviour into the AI’s starting instructions, although it guides the model rather than guaranteeing compliance, which is why it is paired with content filters. Foundry’s interface and SDK help you manage these prompts as part of your application configuration, so that every conversation starts with the right guidelines.

- Evaluations and AI Reports: Azure AI Foundry also helps with reporting on and monitoring the AI’s behaviour over time. As you test your AI, Foundry can generate evaluation reports (essentially dashboards or logs) that show how the AI performs on key metrics like groundedness, relevance, and safety. Developers can use this information to create reports, set up alerts for anomalies or issues, and share these insights with stakeholders. For instance, you might run a suite of test prompts through your chatbot and get a report showing, say, that 98% of its answers are good but 2% are potentially biased. You could then drill down into those cases. Foundry’s integrated application tracing and evaluation capabilities help you trace the execution flow behind AI responses and identify where things went wrong. This level of transparency matters for accountability: you’re not blindly deploying an AI and crossing your fingers; you’re continuously auditing it. And if something does slip through, you have the tools to trace that conversation and improve the system. Essentially, Foundry supports an “AI report” or dashboard for your AI system’s health, which is invaluable for responsible operation.
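
The evaluate, compare, and report loop described in this list can be prototyped locally before you connect it to Foundry’s built-in evaluators. In this hypothetical Python sketch, the two “models” are plain functions and the groundedness score is a crude word-overlap proxy, not how Foundry computes its groundedness metric; the test set and the 0.6 threshold are also made up for illustration.

```python
import re
from statistics import mean


def tokens(text):
    """Lowercase word set, used for a crude overlap comparison."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def groundedness_proxy(answer, context):
    """Fraction of answer words that also appear in the context (1.0 = full overlap).
    A toy stand-in for a real groundedness evaluator."""
    answer_words = tokens(answer)
    if not answer_words:
        return 0.0
    return len(answer_words & tokens(context)) / len(answer_words)


# Hypothetical test set: each question comes with the reference content the bot may use.
TEST_SET = [
    {"question": "How long is the refund window?",
     "context": "Refunds can be requested within 30 days of purchase."},
    {"question": "Do you ship abroad?",
     "context": "We ship to the EU and the UK only."},
]


# Two hypothetical "models", represented as functions from (question, context) to answer.
def model_a(question, context):
    return context  # sticks to the reference text


def model_b(question, context):
    return "Yes, we always offer full refunds anytime, worldwide."  # ignores it


def evaluate(model, test_set, threshold=0.6):
    """Score every test case and flag the ones below the groundedness threshold."""
    scores = [groundedness_proxy(model(row["question"], row["context"]), row["context"])
              for row in test_set]
    flagged = [row["question"] for row, score in zip(test_set, scores) if score < threshold]
    return {"mean_groundedness": mean(scores), "flagged": flagged}


report = {name: evaluate(fn, TEST_SET) for name, fn in [("model_a", model_a), ("model_b", model_b)]}
for name, result in report.items():
    print(f"{name}: mean groundedness {result['mean_groundedness']:.2f}, flagged {result['flagged']}")
```

Swapping the proxy for a real evaluator and the functions for real model endpoints keeps the same shape: one shared test set, one score per case, and a per-model summary that lists the failing cases for drill-down.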

In summary, Azure AI Foundry provides a responsible AI toolchain: from choosing a model that’s fit for purpose, to grounding it with the right data and prompts, to filtering out bad outputs, and finally to monitoring and improving it with evaluations and reports. It’s much easier for developers to do the right thing when these capabilities are readily available. Instead of having to stitch together custom safety mechanisms, you get them out of the box, which lowers the barrier to entry for responsible AI development.

Better AI-Human Interaction Design with the HAX Toolkit

Responsible AI isn’t just about models and code – it’s also about how AI systems interact with humans. Users need to understand and effectively use AI, and the AI’s UX design plays a huge role in that. Microsoft’s Human-AI eXperience (HAX) Toolkit is a set of resources to help teams design AI interactions that are intuitive, trustworthy, and aligned with human needs. In other words, HAX helps you build AI products with a human-centered approach from the design perspective.

The HAX Toolkit is aimed at teams building user-facing AI products, and it’s best used early in the design process. It currently includes four main components:

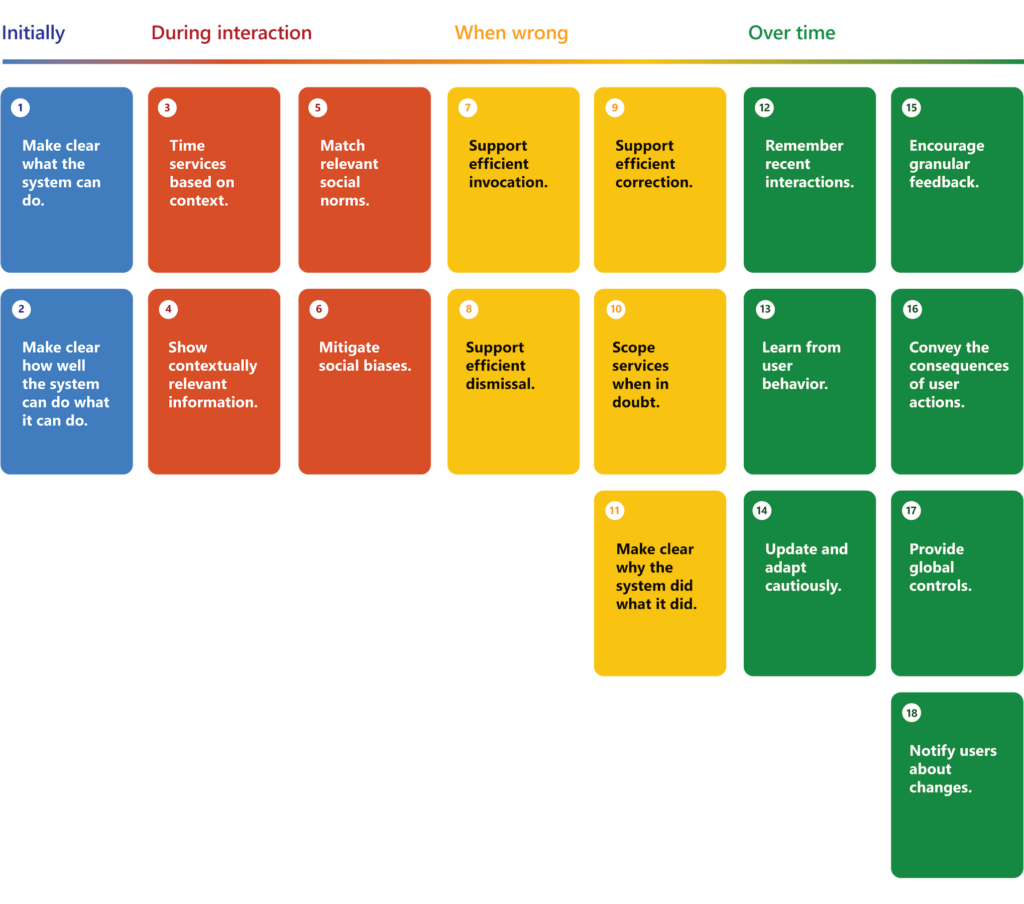

- Guidelines for Human-AI Interaction: These are best practices for how AI systems should behave during interaction with people. Microsoft Research distilled these guidelines from years of study; they cover how an AI should initiate interactions, how it should convey its confidence or uncertainty, how it should handle errors or ambiguity, and how to make its capabilities and limits clear. Designers and developers can use these guidelines as a checklist when planning an AI feature. For example, one guideline is to “make clear why the system did what it did,” which might translate to providing explanations for an AI-driven recommendation. The Guidelines help ensure you don’t overlook key UX considerations that affect user trust.

- HAX Design Library: The design library is a collection of design patterns and examples that illustrate the Human-AI Interaction guidelines in practice. It’s one thing to read a principle like “show contextually relevant information,” but another to see how leading products implement it. The HAX Design Library lets you explore UI patterns, screenshots, and case studies that align with each guideline. This helps teams learn concretely how to apply the guidelines. For instance, if one guideline is to allow users to correct the AI, the design library might show pattern examples of an “undo” or “edit” feature next to AI-generated content. By leveraging these patterns, designers can create interfaces that embody responsible AI principles (like transparency and control) in a user-friendly way.

- HAX Workbook: The workbook is a team discussion and planning tool. It provides a structured way for a multidisciplinary team (product managers, designers, engineers, etc.) to sit down together and decide how to prioritise and implement the guidelines for their specific product. The HAX Workbook essentially prompts the team to answer questions about the user experience: Which guidelines are most critical for our use case? How will we handle scenarios where the AI is wrong? Do we need to inform users that AI is involved in this feature? By working through the workbook, teams ensure they have thought through potential interaction failures or ethical challenges. It also helps break down silos, so that everyone gains a shared understanding of how the AI should behave and what to watch out for.

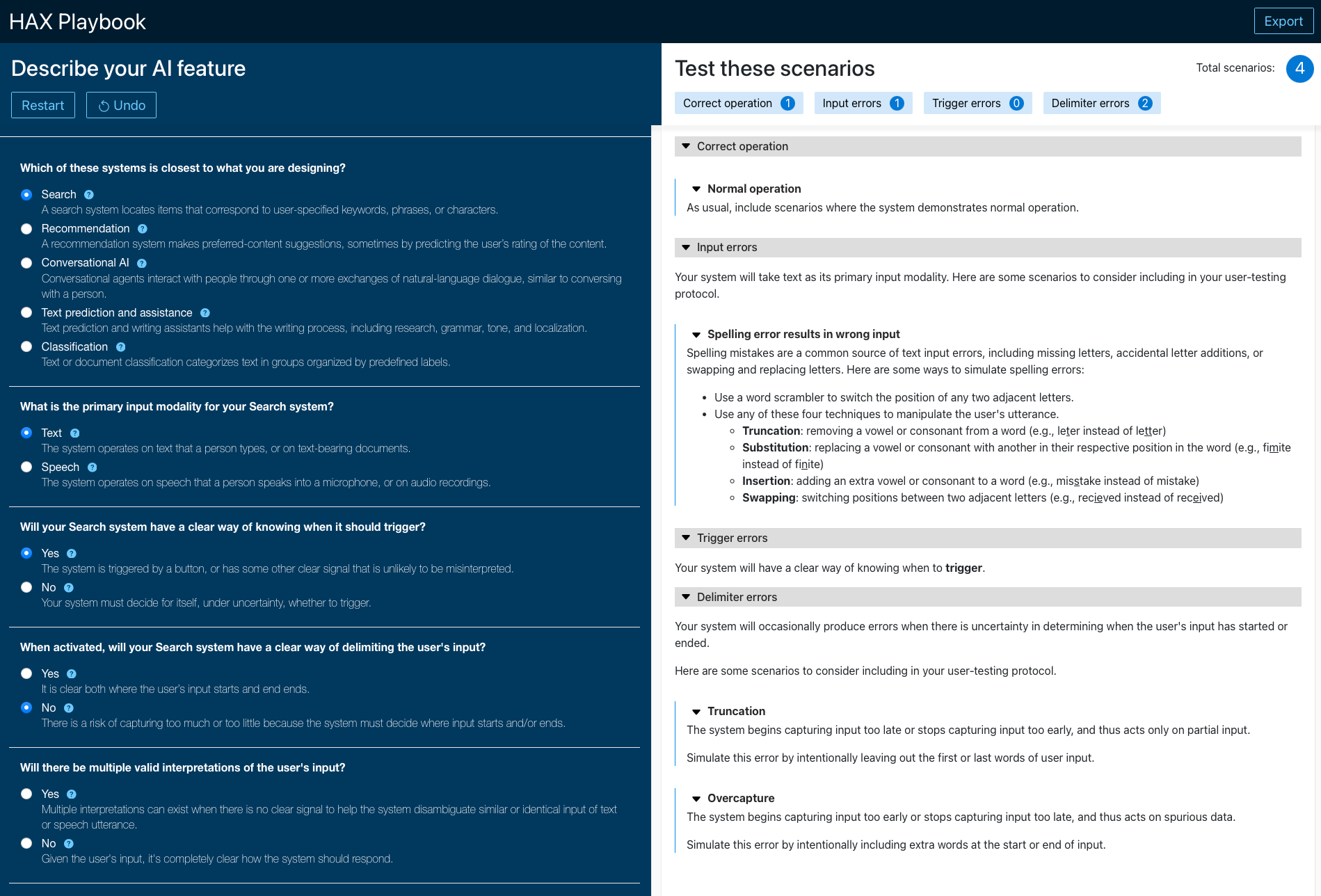

- HAX Playbook: The playbook is a tool for identifying common failures in human-AI interactions, specifically for applications using natural language processing (NLP). Think of it as a catalog of “what can go wrong” when humans chat or interact with AI, along with strategies to mitigate those issues. For example, a common failure is the AI misunderstanding the user’s intent; the playbook would call that out and suggest mitigations such as confirming with the user or offering options. Another failure might be the user over-trusting the AI’s answer, so the playbook would encourage you to consider showing uncertainty or linking to source data. By systematically exploring these failure modes, teams can plan features to prevent them, improving reliability and user satisfaction. Essentially, the HAX Playbook helps you proactively debug the human-AI interaction before your product ever launches.

Using the HAX Toolkit, teams can create AI experiences that are not only effective but also comfortable and trustworthy for users. It’s one thing for an AI model to be state-of-the-art, but if the user interface causes confusion or the AI behaves in opaque ways, users can still end up frustrated or misled. The HAX Toolkit addresses this gap by focusing on UX and interaction design. For instance, by following the Guidelines and using the Workbook, a team building a virtual assistant might decide to always provide a justification for a recommendation (addressing Transparency) or to include a “Was this helpful?” feedback mechanism (addressing Accountability by letting users flag issues). These design elements greatly enhance the overall responsibility of the AI app, complementing the technical safeguards.
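
Design decisions like these eventually show up in the data an app sends to its interface. As a minimal, hypothetical Python sketch (the HAX Toolkit itself is a set of design resources, not a code library), the response object below carries an AI disclosure, its sources, and an uncertainty note, and a small log captures “Was this helpful?” feedback for human review. The field names and the 0.5 threshold are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class AssistantResponse:
    text: str
    sources: list[str]   # documents or links the answer is based on
    confidence: float    # 0.0 to 1.0, however your app estimates it
    ai_generated: bool = True

    def render(self) -> str:
        """Build the text shown to the user, with transparency cues attached."""
        lines = [self.text]
        if self.confidence < 0.5:  # hypothetical threshold for flagging uncertainty
            lines.append("Note: I'm not certain about this answer, so please verify it.")
        if self.sources:
            lines.append("Sources: " + ", ".join(self.sources))
        if self.ai_generated:
            lines.append("This response was generated by AI. Was this helpful? [Yes] [No]")
        return "\n".join(lines)


@dataclass
class FeedbackLog:
    entries: list[dict] = field(default_factory=list)

    def record(self, response: AssistantResponse, helpful: bool, comment: str = "") -> None:
        """Store user feedback so a human can review problem answers later."""
        self.entries.append({"text": response.text, "helpful": helpful, "comment": comment})

    def unhelpful(self) -> list[dict]:
        return [entry for entry in self.entries if not entry["helpful"]]


response = AssistantResponse("Your fare includes one checked bag.", ["fare-rules.pdf"], confidence=0.4)
print(response.render())

log = FeedbackLog()
log.record(response, helpful=False, comment="My fare didn't include a bag.")
print(len(log.unhelpful()), "answer(s) flagged for review")
```

Keeping disclosure, sources, and uncertainty as explicit fields means the UI can apply the guidelines consistently, and the feedback log gives the accountable team a concrete queue of answers to investigate.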

Conclusion

Building responsible AI applications is a multifaceted challenge: it spans technical mitigations (like filters and benchmarks) and thoughtful design (like user interaction guidelines). The good news is that we don’t have to reinvent the wheel. Microsoft’s Responsible AI principles give clear ethical targets to aim for, and its tools, like Azure AI Foundry and the HAX Toolkit, provide practical support for hitting those targets. By learning from past mistakes (such as the chatbot failures above) and leveraging these principles and tools, AI practitioners can develop applications that are not just innovative but also trustworthy and respectful of the people they serve.

Responsible AI is really about caring for your users and the world, even as you dive into cutting-edge tech. As we implement fairness, safety, privacy, inclusiveness, transparency, and accountability in our AI projects, we’re essentially saying we want AI to benefit everyone and harm no one. It’s a journey and there will be bumps along the way, but with a strong framework and the right tooling, it’s absolutely achievable. And at the end of that journey lies AI that people can truly trust, adopt, and celebrate in their daily lives. That’s the kind of AI we all should aspire to create.